Project 1: Bias Detection and Mitigation

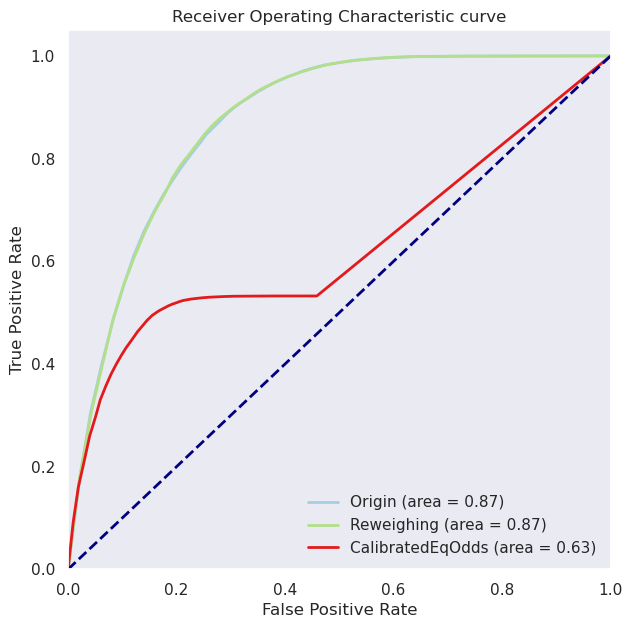

Today, more and more decisions are handled by artificial intelligence (AI) and machine learning (ML) algorithms, as automated decision-making systems are deployed in a growing range of applications. Unfortunately, ML algorithms are not always as fair as we would expect: research has shown that a model can perform differently for distinct groups within the data. Those groups might be identified by protected or sensitive characteristics, such as race, gender, age, and veteran status. In this paper, we investigate methods for detecting, understanding, and mitigating unwanted algorithmic bias:

1. Analyzing the dataset with Exploratory Data Analysis (EDA) and visualizing the sensitive features to check for possible fairness issues in the data.
2. Detecting bias with the comprehensive set of fairness metrics provided by the AIF360 toolkit.
3. Mitigating bias with various preprocessing, in-processing, and post-processing bias mitigation algorithms.
4. Evaluating these algorithms by comparing their impact on the model's initial accuracy and F1-score.

Applying these methods can reduce a model's original accuracy. Prejudice Remover Regularization, an in-processing bias mitigation method, achieved the greatest fairness but not the best accuracy. Other approaches are more effective at maintaining the initial accuracy while reducing bias. In particular, Reweighting, a preprocessing bias mitigation method, performed best overall, improving fairness while achieving an accuracy of 0.9298 and an F1-score of 0.9614.
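To make the detection and preprocessing steps more concrete, here is a minimal pure-Python sketch (not the project's code) of two dataset-level metrics that AIF360 reports, statistical parity difference and disparate impact, and of the reweighing scheme of Kamiran and Calders on which AIF360's `Reweighing` preprocessor is based. The toy labels and groups are invented for illustration, and the encoding (group `1` = privileged, label `1` = favorable) is an assumption. Each (group, label) cell receives the weight P(group)·P(label) / P(group, label), which equalizes the weighted favorable-outcome rate across groups.

```python
from collections import Counter


def base_rate(labels, groups, group, weights=None):
    """(Weighted) share of favorable outcomes (label 1) inside one group."""
    if weights is None:
        weights = [1.0] * len(labels)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    favorable = sum(
        w for y, g, w in zip(labels, groups, weights) if g == group and y == 1
    )
    return favorable / total


def statistical_parity_difference(labels, groups, weights=None):
    """P(y=1 | unprivileged) - P(y=1 | privileged); 0 means parity."""
    return base_rate(labels, groups, 0, weights) - base_rate(labels, groups, 1, weights)


def disparate_impact(labels, groups, weights=None):
    """P(y=1 | unprivileged) / P(y=1 | privileged); 1 means parity."""
    return base_rate(labels, groups, 0, weights) / base_rate(labels, groups, 1, weights)


def reweighing_weights(labels, groups):
    """Weight each instance by P(group) * P(label) / P(group, label) of its cell."""
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_cell = Counter(zip(groups, labels))
    return [
        n_group[g] * n_label[y] / (n * n_cell[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical toy data: group 1 = privileged, label 1 = favorable outcome.
groups = [1] * 10 + [0] * 10
labels = [1] * 7 + [0] * 3 + [1] * 3 + [0] * 7

spd_before = statistical_parity_difference(labels, groups)
di_before = disparate_impact(labels, groups)
print(round(spd_before, 3), round(di_before, 3))  # -0.4 0.429

# Reweighing changes only instance weights; features and labels stay untouched.
weights = reweighing_weights(labels, groups)
spd_after = statistical_parity_difference(labels, groups, weights)
di_after = disparate_impact(labels, groups, weights)
print(spd_after, di_after)  # parity: about 0 and about 1, up to float rounding
```

On this toy data the unweighted metrics show a clear gap (a disparate impact of about 0.43 is well below the commonly cited 0.8 threshold), while the reweighted data reaches parity on both metrics. Because only the instance weights change, a classifier trained with them still sees every original feature and label, which is one plausible reason a preprocessing method like this can retain much of the original accuracy.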